What is AGI, and will it harm humanity?

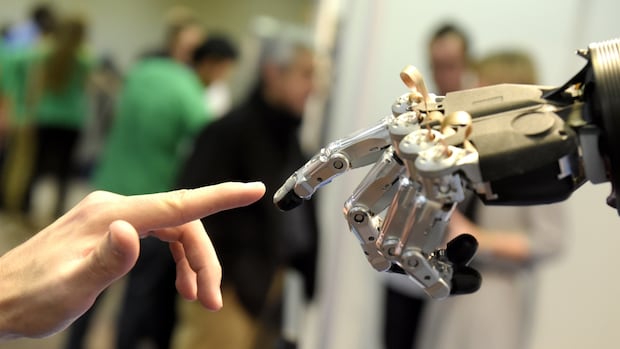

These days many people use artificial intelligence (AI) chatbots for everything from dinner suggestions to battling loneliness, but could humanity be on the cusp of creating machines that can think for themselves — and potentially outthink their creators?

Some big tech companies say that kind of breakthrough, referred to as artificial general intelligence (AGI), is just a few years away. But skeptics say you shouldn’t believe the hype.

“Whenever you see someone talking about AGI, just [picture] the tooth fairy, or like Father Christmas,” said Ed Zitron, host of the tech podcast Better Offline and creator of the Where’s Your Ed At? newsletter.

“These are all fictional concepts, AGI included. The difference is you have business idiots sinking billions of dollars into them because they have nothing else to put their money into,” he told The Current.

Experts disagree on the fine details of what counts as AGI, but it’s generally considered to be an artificial intelligence that is as smart as or smarter than humans, with the ability to learn and act autonomously. That intelligence could then be installed in a robot body that could complete a multitude of tasks.

Demis Hassabis, CEO of Google’s AI lab DeepMind, recently said that his company will achieve AGI by 2030.

“My timeline has been pretty consistent since the start of DeepMind in 2010, so we thought it was roughly a 20-year mission and amazingly we’re on track,” he told the New York Times in May.

Zitron isn’t convinced. He said that Hassabis is “directly incentivized” to talk up his company’s progress, and pointed to uncertainty about the profitability of AI chatbots like Google’s Gemini or OpenAI’s ChatGPT.

“None of these companies are really making any money with generative AI … so they need a new magic trick to make people get off their backs,” he said.

AGI ‘always 10 years away’

AI expert Melanie Mitchell says people have been making predictions about this kind of intelligent AI since the 1960s — and those predictions have always been wrong.

“AGI or the equivalent is always 10 years away, but it always has been and maybe it always will be,” said Mitchell, a professor at the Santa Fe Institute in New Mexico who specializes in artificial intelligence, machine learning and cognitive science.

She said there isn’t a universal agreement about what a working AGI should be able to do, but it shouldn’t be confused with large language models like ChatGPT or Claude, which are a type of generative AI.

Large language models (LLMs) that power generative AI programs have been trained on “a huge amount of human-generated language, either from websites or from books or other media,” and as a result are “able to generate very human-sounding language,” she told The Current.

Zitron said that distinction is important, because it highlights that “generative AI is not intelligence, it is calling upon a corpus of information” that it’s been fed by humans.

He defines AGI as “a conscious computer … something that can think and act for itself completely autonomously,” one that also has the ability to learn.

“We do not know how human consciousness works,” he said. “How the heck are we meant to do that with computers? And the answer is, we do not know.”

Mitchell worries that without a widely agreed definition, there’s a risk that big tech companies will “redefine AGI into existence.”

“They’ll say, ‘Oh, well, what we have here, that’s AGI. And therefore, we have achieved AGI,’ without it really having any deeper meaning than that,” she said.

AGI could be ‘a suicide race’

There are people outside the tech industry who believe AGI could be within reach.

“If our brain is a biological computer, well, then that means it is possible to make things that can think at human level,” said Max Tegmark, a professor at MIT and president of the Future of Life Institute, a non-profit that aims to mitigate the risks of new technologies.

“And there’s no law of physics saying you can’t do it better,” he said.

Tegmark thinks it is hubris, or excessive pride, to assert that AGI can’t be achieved, much as many people once insisted that human flight was impossible.

Some early inventors had tried in vain to create machines that mimicked the rapid wingbeats of smaller birds. But success came with a greater understanding of birds’ wings, and the idea of a machine that glides instead.

“It turned out it was a much easier way to build flying machines,” Tegmark said.

“We’ve seen the same thing happen here now, that today’s state-of-the-art AI systems are much simpler than brains. We found a different way to make machines that can think.”

Tegmark says he wouldn’t be too surprised if “it happened in two to five years,” but that doesn’t mean we necessarily should create robots that can outthink humans.

He described these intelligent machines as a new species, one that could easily threaten humanity’s place in the food chain “because it’s natural that the smarter species takes control.”

“[The] race to build superintelligence is a suicide race, but we don’t need to run that race,” he said.

“We can still build amazing AI that cures cancer and gives us all sorts of wonderful tools — without building superintelligence.”